Roo Code 3.37 Release Notes (2025-12-22)

Roo Code 3.37 introduces experimental custom tools, adds new provider capabilities, and improves tool-call reliability.

New models

Z.ai GLM-4.7 (thinking mode)

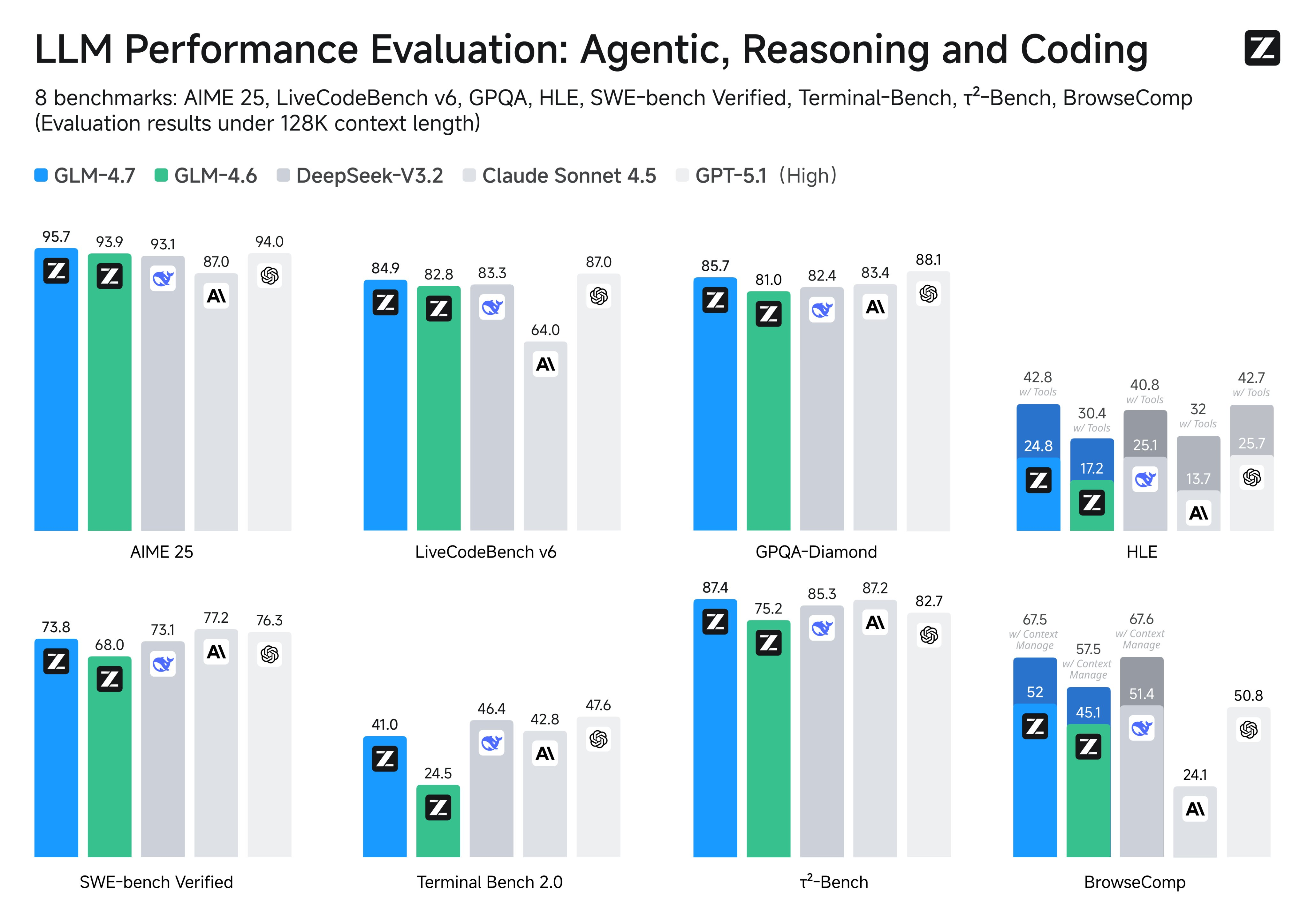

GLM-4.7 is now available directly through the Z.ai provider in Roo Code, as well as via the Roo Code Cloud provider (and other provider routes that surface Z.ai). It’s a strong coding model for agentic workflows, with improved multilingual coding, terminal tasks, tool use, and complex reasoning compared to GLM-4.6 (#10282).

MiniMax M2.1 improvements

MiniMax M2.1 is now available directly through the MiniMax provider in Roo Code, as well as via the Roo Code Cloud provider (and other provider routes that surface MiniMax). It’s a strong pick for agentic coding workflows, with better tool use, instruction following, and long-horizon planning for multi-step tasks, and it’s fast (#10284).

Experimental custom tools

You can now define custom tools so Roo can call your project- or team-specific actions the same way it calls built-in tools. This makes it easier to standardize workflows across a team by shipping tool schemas alongside your project, instead of re-prompting the same steps each time (#10083).

Bug Fixes

- Fixes an issue where Roo could appear stuck after a tool call with some OpenAI-compatible providers when streaming ended at the tool-calls boundary (thanks torxeon!) (#10280)

- Fixes an issue where Roo could appear stuck after a tool call with some OpenAI-compatible providers by ensuring final tool-call completion events are emitted (#10293)

- Fixes an issue where MCP tools could break under strict schema mode when optional parameters were treated as required (#10220)

- Fixes an issue where the built-in `read_file` tool could fail on some models due to invalid schema normalization for optional array parameters (#10276)

- Fixes an issue where `search_replace`/`search_and_replace` could miss matches on CRLF files, improving cross-platform search-and-replace reliability (#10288)

- Fixes an issue where Requesty’s Refresh Models could leave the model list stale by not including credentials in the refresh flow (thanks requesty-JohnCosta27!) (#10273)

- Fixes an issue where Chutes model loading could fail if the provider returned malformed model entries (#10279)

- Fixes an issue where `reasoning_details` could be merged/ordered incorrectly during streaming, improving reliability for providers that depend on strict reasoning serialization (#10285)

- Fixes an issue where DeepSeek-reasoner could error after condensation if the condensed summary lacked required reasoning fields (#10292)
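
To illustrate the kind of problem behind the CRLF fix (#10288): a search string written with LF line endings never matches a file saved with CRLF unless line endings are normalized first. The snippet below is a minimal sketch of that idea, not Roo Code's actual implementation. The function name `replaceIgnoringLineEndings` and its behavior (first match only, CRLF restored afterwards) are assumptions made for illustration.

```typescript
// Hypothetical helper for illustration only; not taken from the Roo Code source.
// Replaces the first occurrence of `search` in `content`, treating LF and CRLF as equivalent.
// Limitation: a file with mixed line endings comes back with CRLF throughout.
function replaceIgnoringLineEndings(
  content: string,
  search: string,
  replacement: string
): string {
  // Remember whether the file used CRLF so it can be restored afterwards.
  const usesCrlf = content.includes("\r\n");

  // Normalize everything to LF before matching.
  const toLf = (s: string): string => s.replace(/\r\n/g, "\n");
  const normalizedContent = toLf(content);
  const normalizedSearch = toLf(search);

  const index = normalizedContent.indexOf(normalizedSearch);
  if (index === -1) {
    return content; // No match: leave the file untouched.
  }

  const result =
    normalizedContent.slice(0, index) +
    toLf(replacement) +
    normalizedContent.slice(index + normalizedSearch.length);

  // Convert back to CRLF if that is what the original file used.
  return usesCrlf ? result.replace(/\n/g, "\r\n") : result;
}

// A CRLF file searched with an LF-only pattern.
const crlfFile = "const a = 1;\r\nconst b = 2;\r\n";
const updated = replaceIgnoringLineEndings(
  crlfFile,
  "const a = 1;\nconst b = 2;",
  "const a = 10;\nconst b = 20;"
);
console.log(JSON.stringify(updated));
```

A naive `crlfFile.indexOf("const a = 1;\nconst b = 2;")` returns `-1` here, which is the failure mode the release note describes. Normalizing before matching and restoring the original line endings afterwards keeps the file's convention intact.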

Misc Improvements

- Cleaner eval logs: Deduplicates repetitive message log entries so eval traces are easier to read (#10286)

QOL Improvements

- New tasks now default to native tool calling on models that support it, reducing the need for manual tool protocol selection (#10281)